Been reflecting on code to write when I’m on the train. I figure the best thing I can do is write code to help my NoSQL brethren. Here’s what I plan to do…

The great thing about writing open source code for your day job is that you have to be very targeted. I only spend time writing code when it saves me time and helps us win deals.

Since I started at Pentaho, now Hitachi Vantara, nearly one year ago, I’ve been passionate about pushing on NoSQL integration. The NoSQL market is, after all, equal in size to the Hadoop market at around $500m per year.

If you compare combined revenues for Hadoop players, they are roughly equal to the combined revenues of the NoSQL companies. Problem is, of course, there are really only three Hadoop vendors, and about 15 decent NoSQL vendors! So the NoSQL market is more fragmented.

This has an interesting side effect – NoSQL companies are too small to invest much R&D in tooling (rather than APIs) to get data in and out, to incorporate machine learning engines (“doing more with your data”), and to create easy-to-use analytics applications on top.

Happily enough, that is a side effect Pentaho software can definitely help with. In the last 4 months alone I’ve been asked directly by a dozen customers and prospects about integration with different NoSQL databases. I know many colleagues are having similar requests.

Happily, as they are live opportunities, I can spend a day writing a basic connection plugin for systems we don’t currently support, or for systems whose Pentaho Marketplace community connectors are a little bit out of date. (Yes, a day – it’s really easy to extend Pentaho Data Integration!)

In the last few months I’ve written basic working connectors for:-

- NoSQL:-

  - MarkLogic Server – Primarily for an existing publishing customer

  - AWS DynamoDB – A manufacturing prospect with an IoT use case

  - ArangoDB – For a partner, potentially for use with Financial Services clients

- Other data stores:-

  - Hitachi Content Platform (HCP) – our very own Object Store in Hitachi Vantara (I also had to create an HCP Java API wrapper for REST for this)

  - Google BigQuery – for bulk data loading and data streaming into BigQuery

Here’s an image of what functions these currently provide:-

“Ah!” I hear you say, “but they’re basic connectors! I bet they’re crappy!” – Well, they’re not fully featured using all the features of the underlying database (yet…), but they do support tens of thousands of record operations (on average executing around 3500 per second on my little laptop). They support connection pooling, batching, and asynchronous streaming where supported. So they’re pretty serious connectors.

Pentaho also currently has community connectors on the marketplace for:-

- MongoDB

- Cassandra (and DataStax Enterprise)

- Neo4j

- Memcached

Here are the current, used, community steps available in Pentaho:-

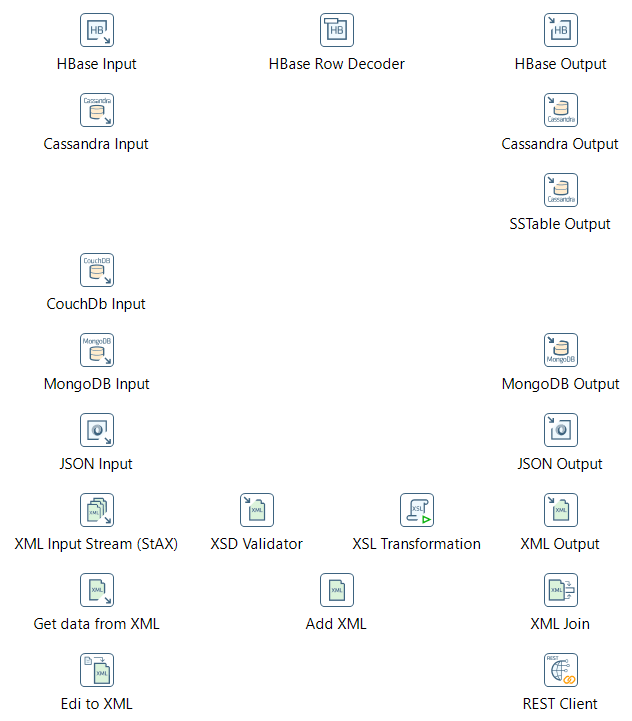

Here’s also a picture of the Out Of The Box (OOTB) NoSQL relevant steps in Pentaho – useful stuff:-

I’ve also had lots of chats internally with fellow Sales Engineers about other systems people would like connectors for. I’ve come to the conclusion that as a minimum people need to:-

- Scan a database or collection and read all the records and/or metadata

- Fetch a named record/document by id

- Write a named record/document by id (or autogen a record id on creation)

There are also some niceties people like to have:-

- Restrict a scan by using a (usually much more efficient) query mechanism, which most document databases provide

- Delete a named record/document by id

- Invoke a data service, if provided by the platform (like Foxx in ArangoDB)

- Write custom metadata about a record, if supported by the platform (like in Hitachi Content Platform – HCP)
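To make that list a bit more concrete, here is a minimal sketch of what such a connector contract could look like in Java. To be clear, this is not the Pentaho step API and not the code in my connectors: `BasicNoSqlConnector`, `InMemoryConnector` and every method name below are hypothetical, made up purely for illustration. Documents are plain strings to keep it short, and the in-memory class only exists so the sketch runs without a real database. Data services and custom metadata are left out because they vary so much by platform.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.function.Predicate;

/** Hypothetical contract for a basic connector (illustrative only, not a Pentaho API). */
interface BasicNoSqlConnector {
    /** Minimum: scan a collection and return every record as id -> document. */
    Map<String, String> scan(String collection);

    /** Minimum: fetch a named document by id. */
    Optional<String> fetch(String collection, String id);

    /** Minimum: write a document by id; a null id means "generate one". Returns the id used. */
    String write(String collection, String id, String document);

    /** Nicety: restrict a scan. This default just filters a full scan;
     *  a real connector would push the query down to the database instead. */
    default Map<String, String> query(String collection, Predicate<String> filter) {
        Map<String, String> matches = new LinkedHashMap<>();
        scan(collection).forEach((id, doc) -> {
            if (filter.test(doc)) {
                matches.put(id, doc);
            }
        });
        return matches;
    }

    /** Nicety: delete a named document by id. Unsupported unless overridden. */
    default boolean delete(String collection, String id) {
        throw new UnsupportedOperationException("delete is not supported by this connector");
    }
}

/** Toy in-memory implementation so the sketch can run without a database. */
class InMemoryConnector implements BasicNoSqlConnector {
    private final Map<String, Map<String, String>> collections = new LinkedHashMap<>();

    @Override
    public Map<String, String> scan(String collection) {
        Map<String, String> docs = collections.get(collection);
        // Return a copy so callers cannot modify the stored data.
        return new LinkedHashMap<>(docs != null ? docs : Collections.<String, String>emptyMap());
    }

    @Override
    public Optional<String> fetch(String collection, String id) {
        Map<String, String> docs = collections.get(collection);
        return docs == null ? Optional.empty() : Optional.ofNullable(docs.get(id));
    }

    @Override
    public String write(String collection, String id, String document) {
        // Auto-generate an id on creation when the caller does not supply one.
        String key = (id != null) ? id : UUID.randomUUID().toString();
        collections.computeIfAbsent(collection, c -> new LinkedHashMap<>()).put(key, document);
        return key;
    }

    @Override
    public boolean delete(String collection, String id) {
        Map<String, String> docs = collections.get(collection);
        return docs != null && docs.remove(id) != null;
    }
}
```

The split between abstract methods and `default` methods mirrors the two lists above: a connector has to implement scan, fetch and write, while the niceties either fall back to something slow but correct (filtering a full scan) or report that they are unsupported until someone overrides them.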

What’s next for Pentaho and NoSQL?

We’re only getting asked for more integrations by customers – all of these linked to real projects, with real software revenue for us. (I don’t get out of bed for less than a $250k deal, after all)

Interestingly, giving the basic connectors away (they’re all Apache 2.0 licensed!) seems like a great way to generate interest, to be certain of being down-selected, and then to win business. Customers like it when you can react quickly to needs – not having a connector isn’t an issue, so long as you can prove how quick it is to build one.

If you don’t have a connector though, and can’t show how quickly you can build one, then you lose the deal right there, and right then, in the first face-to-face meeting.

We make our money by providing the Pentaho Enterprise Platform which can do a whole lot more than just move data around. From data preparation, data quality fixes, through to feature engineering, data science analysis orchestration, predictive maintenance, and self service dashboarding/analytics, and more besides – there’s plenty of paid-for functionality to warrant a significant license revenue spend.

I’ve been asked recently about new or improved connectivity for:-

- MongoDB

- Neo4j

- MarkLogic Server (Triple Store side, and query support)

- Cassandra

- Accumulo

- CosmosDB (aka DocumentDB and Tables)

I’d also personally like to add (customer requests permitting):-

- Redis (cos it’s crazy fast)

- Riak (cos it’s died and people need to migrate off of it)

Given I’ve written 3 NoSQL connectors in the last 3 months, I’m pretty certain we can get all the top 15 in the db-engines rankings supported in 2018! That’s the goal anyway, so keep an eye out for all our connectors!

I’m confident I’ll make Pentaho the de facto and best choice for NoSQL ETL, Data Science, and Analytics! (If it isn’t already!)

If you are a potential customer and would like to see a new system added, please do get in touch with us in the Pentaho team within Hitachi Vantara.